Technical discussion and idea-workout thread for Snuggles, The Wolf, a robot intended for emotional support interaction.

Discussion of the project itself is welcome; ridicule or other negative feedback about the idea itself is not.

The project concept is pretty complex, so reading all of the technical posts is pretty much required to understand what's actually going on and what is going to be required. (There are certain to be side-discussion posts here and there, but it should be quickly obvious which are those and which are technically related, so you can skip them.)

Primary purpose: Over the last decade or so, I've seen how many people out there (including myself for a short while after the housefire) can't have an actual animal companion for one reason or another, but could benefit emotionally from interacting with one. So I've been very slowly learning bits and pieces about robotics to develop an open-source one that those capable could build, and those not capable could have someone build for them.

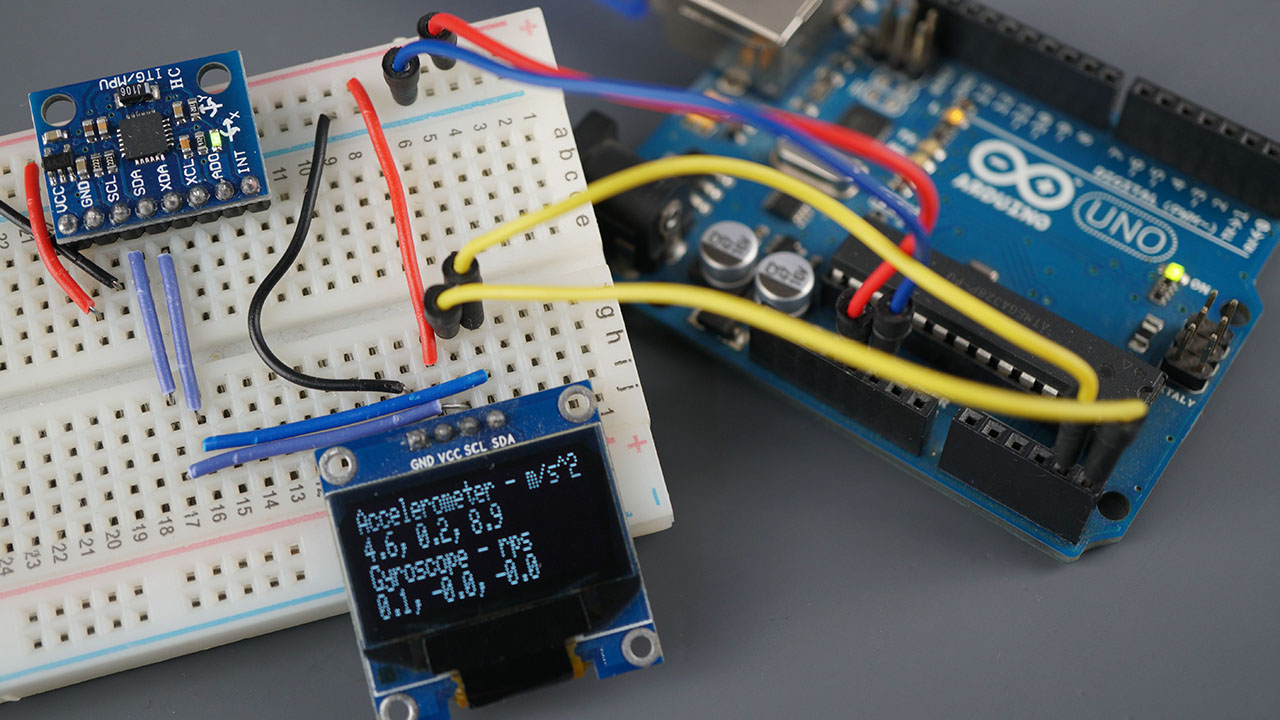

The intent is to be modular, so only those capabilities desired have to be built. For instance, if all one wants is for it to respond to touch or hugs by making comforting cute sounds, just build the Proprioception Network and Sound Modules, and include only those in the software on the MCUs.
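As a rough illustration of that modular idea, here is a minimal sketch in Python (which could be adapted to MicroPython or rewritten in C++ for an actual MCU). Nothing here is the project's real design: the `EventBus`, `Event`, the class names, the event names "touch" and "hug", and the sound names are all hypothetical placeholders. It only shows how a sensing module and a sound module could be included or left out without changing each other.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Event:
    kind: str        # hypothetical event names, e.g. "touch" or "hug"
    strength: float  # assumed scale: 0.0 (light) to 1.0 (firm)


class EventBus:
    """Passes events from sensing modules to whichever modules are installed."""

    def __init__(self) -> None:
        self._handlers: Dict[str, List[Callable[[Event], None]]] = {}

    def subscribe(self, kind: str, handler: Callable[[Event], None]) -> None:
        self._handlers.setdefault(kind, []).append(handler)

    def publish(self, event: Event) -> None:
        # Events with no subscribers are simply dropped, so a build that
        # leaves a module out still runs.
        for handler in self._handlers.get(event.kind, []):
            handler(event)


class ProprioceptionModule:
    """Stand-in for the sensing side; real code would read sensors."""

    def __init__(self, bus: EventBus) -> None:
        self.bus = bus

    def report(self, kind: str, strength: float) -> None:
        self.bus.publish(Event(kind, strength))


class SoundModule:
    """Stand-in for the sound side; records sound names instead of playing."""

    def __init__(self, bus: EventBus) -> None:
        self.played: List[str] = []
        bus.subscribe("touch", self.on_contact)
        bus.subscribe("hug", self.on_contact)

    def on_contact(self, event: Event) -> None:
        # Placeholder rule: firmer contact gets a livelier sound.
        sound = "happy_whine" if event.strength > 0.5 else "soft_sigh"
        self.played.append(sound)


if __name__ == "__main__":
    bus = EventBus()
    senses = ProprioceptionModule(bus)
    sound = SoundModule(bus)  # omit this line for a build without sound
    senses.report("hug", 0.8)
    print(sound.played)
```

The point of the bus in the middle is that the sensing module never needs to know which other modules exist, so adding or removing a capability is a matter of which modules get created at startup.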

The following posts will start describing the technical requirements and ideas for solving some of these, and start discussing how to implement these things.

Even with all my research, I still know very little about coding, robotics, etc., so I welcome all assistance with these things.

Readers may skip the next part as it is just background for why I need (not just want) to do this type of project, and doesn't directly affect any of the technical aspects.

I started this thread now because I have begun buying some really cheap robotics parts to experiment with and learn from, and I now have a 3D printer, which makes quite a bit of fabrication much easier than it previously would have been. I will be posting my results and experiments here for those wishing to learn (or just follow along and laugh internally at my naivete in these things).

Subsections of the project description are in the following posts.

***********************************************************

A short non-technical background for the project (not necessary to read if you just want to help with it, but useful to understand why some parts of it have to exist or do what they must do, and why I am doing it at all):

I've had dogs nearly all my life, usually big ones (like the Saint Bernards I've had several of for the last decade-and-a-half), but like all living things, they die eventually. Most of the time I have more than one so I can get thru the loss of another by focusing on the remaining one(s), but there have been times when I did not have another or lost all of them at once, and had none to help me thru those times.

I have also always had homemade "wolfys": roughly wolf-shaped large plushy-kind-of-things to snuggle up with to help me sleep, or when I am very stressed out, as I am not a normal person and don't connect well with other people (I don't really understand them, and it's usually mutual). "Doctors" call it Asperger's or Autistic Spectrum; my brother calls it Neurodivergent. But it leaves me with a hole to fill, and not all dogs like the snuggles much or want to stay with me all the time (like JellyBeanThePerfectlyNormalSchmoo I have now). It's great when they do, but I wouldn't want to force them to just to help *me* out. So for those times, I keep the wolfys around--there's usually just one, but sometimes I have a second one to experiment with different ways of making one that would be better in some way without altering the one in use.

They're usually just minimally-poseable roughly-wolf-looking about-lifesized shapes, made more for how they feel than how they look. Some of you may have heard of weighted blankets, body pillows, etc., that are intended to help those that are comforted by the feeling of weight on them, or of holding something, etc. There are many variations on these; the wolfys are just another more complex version of those.

Wolf-shaped because I've been interested in wolves since I was a kid, and I loved how I could see them snuggle up with each other (sometimes quite aggressively), even before I ever had a dog. So I started out with some big plushy my parents probably got for me (don't remember, was really little), and as that wore out and as I grew up I started making my own out of old clothes and pillows and plushies from thrift stores, etc. At some point I got a cheap MP3 player with a looping function, recorded sleeping-breathing noises into it, and put that and a speaker inside. But I've wanted some sort of interaction for a while now, and so started this project. (I first had the idea of doing it in the 1990s but robotics was too expensive to get into...nowadays it's practically dirt cheap, and even I can afford it.)

I rarely talk about them because so many people just make fun of me as if I were a child, but I often feel like one and am always stressed out by other people, and my dayjob involves constantly interacting with many people, and then there are all those I interact with on the internet. Some of all these people are good, many are alright, but some are just not very nice people, and I have to deal with them anyway, with (as my mom once put it) my Lee Press-On Smile. Then I can freak out when I'm home and let the wolfy calm me down. (At work I wear a small wolfy plushie on my suspenders to help me with the most stressful moments each day...I wish I could keep the fullsize one with me (a real dog would be even better but that's simply not possible even if JellyBean (or whichever one I had at the time) were inclined to be snuggled and hugged at random times, and could stay with me all the time without "being a dog")).

(Sometimes I have a dog that can do that, like Kirin did, or Lady a couple decades before, but it's rare.)

Some pictures of the most recent versions, with one on the couch for scale. (I don't have pics of any older ones)
